If AI can become reasonable, why can't we?

A perspective on the launch of OpenAI o1 and what it means for humans.

In case you missed the headlines, on September 12th OpenAI launched a new model called OpenAI o1. If you're a ChatGPT Plus subscriber, it's now available in preview and mini versions.

So what is OpenAI o1? Is this the next evolution of ChatGPT?

In a word, no.

Plucked from the announcement, OpenAI o1 "is a new series of AI models designed to spend more time thinking before they respond." That's worth repeating in case you skimmed over the sentence. You interact with o1 the same way you interact with ChatGPT: through prompts. But when you enter a prompt, you'll immediately notice that it does something quite different from what you're used to.

It thinks.

It doesn't immediately start spewing endless words line by line. Instead, it runs a routine that performs the AI equivalent of reasoning before delivering a response to your prompt. And it lets you know that it's working on it, with status messages such as "Thinking," "Processing" and "Organizing" while you wait for what o1 has to say.

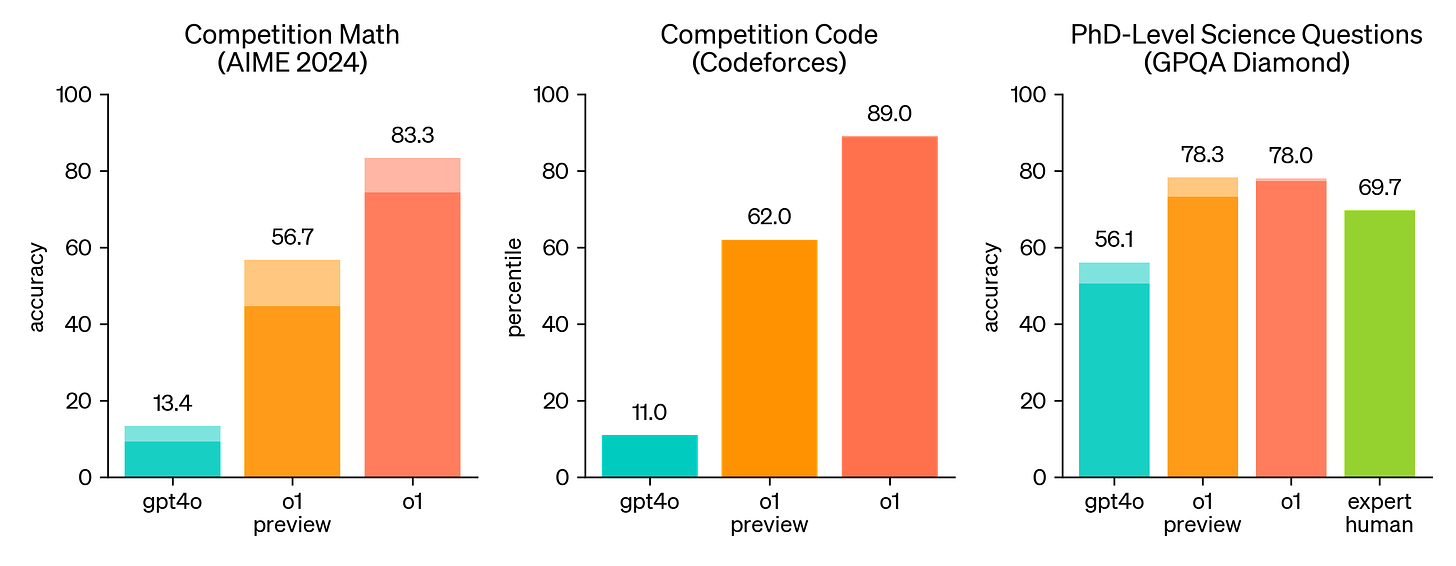

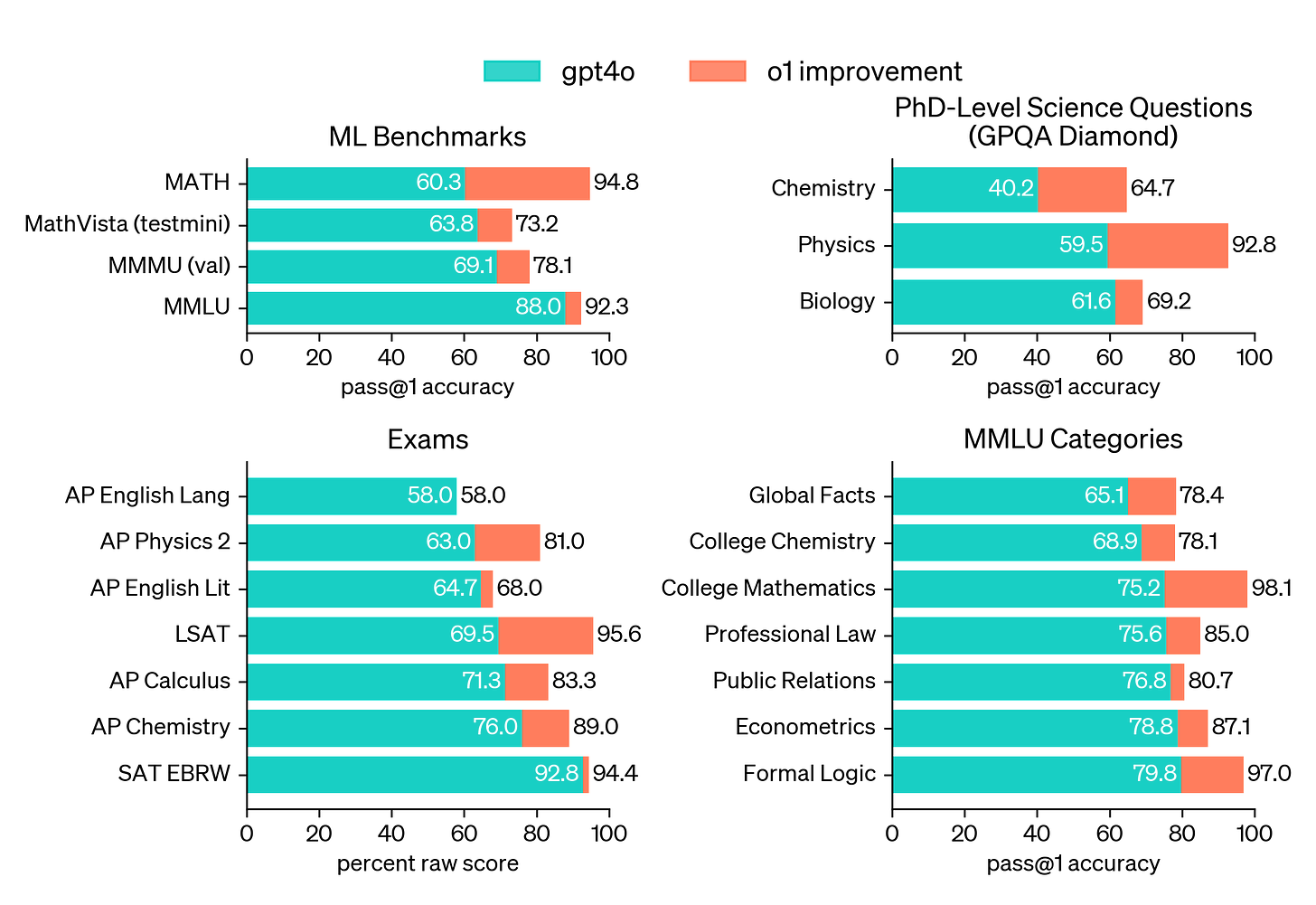

This reasoning delivers quite a few improvements over GPT-4o in specific areas, as shown in a series of tests comparing o1 with GPT-4o and even with human experts.

How does it do that?

For competitive reasons, the specifics of how the reasoning models work are not transparent to the user, but there are some things we do understand. According to Dr. Jim Fan, Senior Research Manager at NVIDIA, o1 spends relatively less computing power (aka compute) on training and significantly more compute on inference than today's LLMs such as GPT, Claude, Llama and Gemini. Inference is the work a model does when it generates a response to your prompt; for o1, that includes the reasoning it performs before it shares a response with the user. The bars below represent the amount of compute allocated to each of the three phases an AI model goes through. o1 is represented by a strawberry, a nod to its code name during development, which plays on the fact that LLMs struggle to count the number of r's in the word "strawberry."

This is all very new, and the model is still in preview, so there is a lot more to come as o1 moves out of preview and the throngs of people who analyze these models test them to see what they're good at and what they're not.

So what can we learn from this release and from the focus of AI engineers who are barreling down the road toward artificial general intelligence? It's the same thing your parents and grade school teachers used to harangue you about when you were a kid.

Think before you speak.

For life sciences companies that are transforming as a result of AI, the challenge is not solely keeping up with which AI model to use and for what. The underappreciated challenges are how we evolve our ways of working and how we communicate with our colleagues to deliver the change needed to maximize the potential of AI.

In short, we don’t always think before we speak.

You don't have to look hard¹ to find study after study after study outlining the detrimental impact of impulsive communication by leadership on teams and individuals working together toward a goal. Leaders sometimes miss that transformation needs to reflect more than just the efficiency and effectiveness goals of the organization. It needs to reflect the values of the people who work there and connect those values to what the transformation means for them, particularly in organizations that aspire to achieve patient centricity.

So learn a lesson or two from Sam and the team at OpenAI. Spend quite a bit more of your own human compute on inference. It could make a transformative difference.

And now for something completely different…

Shameless plug: my book on omnichannel for pharma is now available on Kindle, in paperback and even on ChatGPT. Audiobook coming soon. :)